While considering Actor-Critic for handling the decision making of agents, my colleague and I had an interesting discussion about policy gradient methods in reinforcement learning. To clarify the concepts we discussed, I have summarised what I learned in this short post.

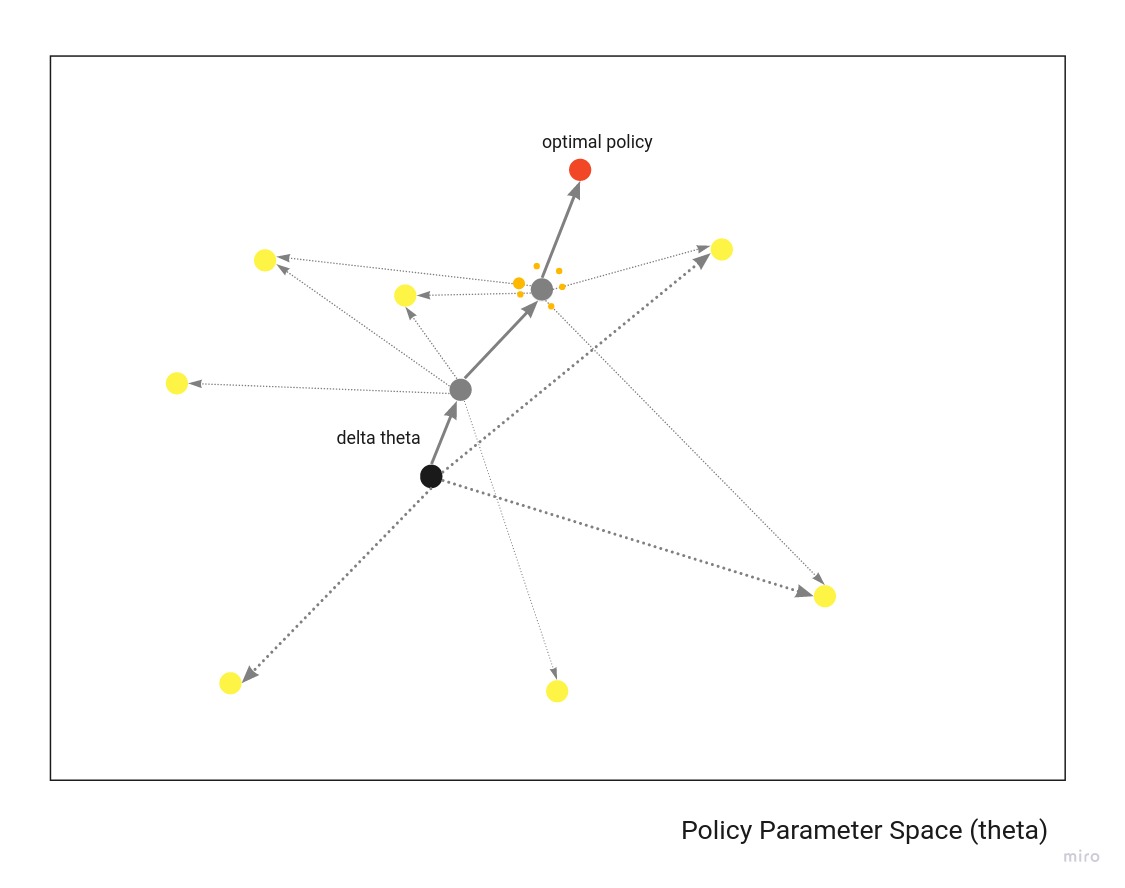

Consider the policy parameter space.

The general idea of policy gradient methods is to compute the gradient of the expected reward with respect to the parameters of the policy. However, computing that gradient directly would require knowing the reward function and the transition function of the environment, which we usually do not have. With a basic approach, we instead try different directions by randomly perturbing the policy parameters and evaluating the policies that we get.

If we sample enough directions, we will eventually find the best direction (or one close enough to it).
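To make the idea of sampling directions concrete, here is a minimal sketch of my own, not a standard algorithm from a library. The `evaluate` function is a hypothetical stand-in for running a policy and measuring its return (here just a toy quadratic with a made-up optimum), and the names `sigma`, `n_directions` and `learning_rate` are assumptions chosen for illustration.

```python
import numpy as np


def evaluate(theta):
    """Hypothetical stand-in for running a policy and measuring its return.

    Here the 'return' is highest when theta equals a made-up optimum.
    """
    target = np.array([1.0, -2.0])
    return -np.sum((theta - target) ** 2)


def random_direction_search(theta, n_directions=20, sigma=0.1,
                            learning_rate=0.5, n_iters=200, seed=0):
    """Try random directions around theta and step towards the best one."""
    rng = np.random.default_rng(seed)
    for _ in range(n_iters):
        # Sample candidate directions in the policy parameter space.
        directions = rng.normal(size=(n_directions, theta.size))
        # Evaluate the perturbed policy for each direction.
        scores = np.array([evaluate(theta + sigma * d) for d in directions])
        best = directions[np.argmax(scores)]
        # Move only if the best perturbed policy beats the current one,
        # and only part of the way towards it (the learning rate).
        if scores.max() > evaluate(theta):
            theta = theta + learning_rate * sigma * best
    return theta


start = np.zeros(2)
final = random_direction_search(start)
print(evaluate(start), evaluate(final))
```

Each iteration costs `n_directions` policy evaluations, which is exactly why this approach becomes expensive when evaluating a policy means running many episodes.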

The learning rate lets us move only part of the way towards a new policy, so that we test nearby policies instead of jumping completely to a new one.

Why is policy gradient not so easy in RL? The advantage of the policy gradient is that we don’t need a separate exploration strategy as in classic RL problems, since trying perturbed policies already explores. The disadvantages are that (1) we need to evaluate many directions to approximate the gradient and (2) we are never certain that we have found the best direction for updating our policy.

Actor-Critic algorithms try to address this problem by limiting the number of policies to evaluate:

- The “Critic” part of the algorithm, which can estimate the action-value (the Q value) or the state-value (the V value), approximates the quality of the new policies.

- The “Actor” updates the policy distribution in the direction suggested by the “Critic”.
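The two roles above can be sketched in a few lines. This is a minimal one-step Actor-Critic of my own on a hypothetical toy corridor (five states, the goal at the right end), assuming a tabular softmax actor and a state-value critic that uses the TD error as its signal. The environment, the function names and the hyperparameters are all made up for illustration.

```python
import numpy as np

N_STATES = 5        # hypothetical corridor: states 0..4, goal is state 4
ACTIONS = (-1, +1)  # move left or move right


def step(state, action_index):
    """Toy environment: reward 1 when the goal state is reached."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def softmax(x):
    z = np.exp(x - x.max())
    return z / z.sum()


def train_actor_critic(n_episodes=500, gamma=0.95, actor_lr=0.1,
                       critic_lr=0.2, max_steps=100, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros((N_STATES, len(ACTIONS)))  # actor: action preferences
    values = np.zeros(N_STATES)                 # critic: estimates of V(s)
    for _ in range(n_episodes):
        state = 0
        for _ in range(max_steps):
            probs = softmax(theta[state])
            action = rng.choice(len(ACTIONS), p=probs)
            next_state, reward, done = step(state, action)

            # Critic: the TD error says whether the outcome was better
            # or worse than the current value estimate predicted.
            target = reward + (0.0 if done else gamma * values[next_state])
            td_error = target - values[state]
            values[state] += critic_lr * td_error

            # Actor: move the policy in the direction suggested by the
            # critic. For a softmax policy, the gradient of log pi(a|s)
            # with respect to the preferences is one_hot(a) - probs.
            grad_log_pi = -probs
            grad_log_pi[action] += 1.0
            theta[state] += actor_lr * td_error * grad_log_pi

            state = next_state
            if done:
                break
    return theta, values


theta, values = train_actor_critic()
for s in range(N_STATES - 1):
    print(s, softmax(theta[s]))  # action probabilities (left, right)
```

Compared with sampling many directions, each environment step here produces one policy update, because the critic supplies the evaluation that we would otherwise have to obtain by running the perturbed policies.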

Regarding Actor-Critic algorithms, I found the following two posts interesting and easy to understand: